Mastering AB Testing: A Guide to Data-Driven Product Decisions

Learn how AB testing drives smarter product decisions. Explore real AB testing examples, improve conversion rates, and find the right method for your team.

AB testing is a fundamental methodology that empowers product teams to make data-driven decisions rather than relying on intuition. At its core, it involves comparing two variations of a digital experience to see which one performs better against a specific goal. This practice strips away the guesswork from product development, allowing teams to validate hypotheses with actual user behavior.

As a product professional, your primary goal is to deliver value to the user while driving business outcomes. Relying on assumptions is a fast track to wasted engineering effort and stagnant metrics. By systematically exposing different segments of your audience to varied experiences, you gain quantitative proof of what actually resonates with your target market.

In this article, we will explore the critical components of successful experimentation and how you can apply them to your product strategy. We will cover practical execution methods, ways to measure success, and how to recognize when classic testing is not the right fit and you need to pivot your approach.

Real-World AB Testing Examples for Teams

To truly grasp the power of experimentation, it helps to look at practical AB testing examples that drive tangible business results. Product teams constantly generate new AB testing ideas, ranging from simple visual changes to complex algorithmic adjustments. However, the most impactful tests always start with a strong, measurable hypothesis. For instance, an e-commerce team might hypothesize that removing the navigation bar during checkout will reduce distractions and decrease cart abandonment. By randomly routing 50% of traffic to the standard checkout and 50% to the simplified version, they can estimate the impact on their checkout completion rate. Other common tests involve experimenting with pricing page structures, altering the tone of copy in push notifications, or changing friction points during a sign-up flow. The goal is never to test at random, but to isolate variables that directly influence user decision-making.
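Random but consistent assignment is what makes a 50/50 split like the checkout example trustworthy: a returning user should always see the same checkout. Below is a minimal sketch of one common approach, hashing the user ID together with an experiment name. The function `assign_variant`, the experiment key `checkout_nav_test`, and the variant labels are hypothetical; real experimentation platforms implement bucketing in their own ways.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants: tuple[str, ...] = ("control", "no_nav_checkout")) -> str:
    """Deterministically assign a user to one variant with equal probability.

    Hypothetical helper: hashing "experiment:user_id" means the same user always
    gets the same variant within an experiment, while assignments across
    different experiments are effectively independent.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the first 8 hex characters (32 bits) as an integer and map it
    # onto one of the variants.
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]


# Usage: simulate 10,000 synthetic users and check the split is close to 50/50.
counts = {"control": 0, "no_nav_checkout": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "checkout_nav_test")] += 1
print(counts)
```

Hashing instead of calling a random number generator at request time means no assignment table is needed to keep a user's experience stable across sessions.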

At Product People, we frequently step into organizations where the experimentation culture needs a strategic overhaul to produce meaningful outcomes. We use our first-hand experience to guide teams away from vanity metrics and toward tests that move the needle. A great example of this is when we worked with a mobility company facing bottlenecks in user onboarding. We needed to optimize a complex verification funnel where users were dropping off before completing their profiles. By carefully structuring targeted variations in the user interface and messaging, we helped WeShare (now Miles) increase new user verification conversion rates, all while the company was navigating a massive internal reorganization. The key to our success was strictly defining the success criteria upfront and ensuring we had enough traffic volume to reach statistical significance quickly.

When you are building your own backlog of experiments, focus on areas of your product with high traffic and high drop-off rates. These zones offer the highest potential return on investment for your testing efforts. It is also highly recommended to document every test, win or lose, in a centralized repository. According to foundational research on controlled experiments on the web, a significant portion of ideas fail to improve the baseline metric. Embracing these "failures" as vital learning opportunities is what ultimately drives long-term product success and innovation.
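One simple way to act on the "high traffic, high drop-off" advice is to rank funnel steps by how many users they lose each week. The scoring rule below (weekly visitors multiplied by drop-off rate), the `FunnelStep` class, and the funnel numbers are illustrative assumptions rather than a standard formula; you may also want to weigh implementation effort before committing to a test.

```python
from dataclasses import dataclass


@dataclass
class FunnelStep:
    name: str
    weekly_visitors: int   # users who reach this step each week
    drop_off_rate: float   # share of those users who leave here (0.0 to 1.0)

    @property
    def users_lost_per_week(self) -> float:
        # Hypothetical opportunity score: how many users this step loses weekly.
        return self.weekly_visitors * self.drop_off_rate


def rank_test_candidates(steps: list[FunnelStep]) -> list[FunnelStep]:
    """Order funnel steps from largest to smallest weekly user loss."""
    return sorted(steps, key=lambda s: s.users_lost_per_week, reverse=True)


# Illustrative numbers only.
funnel = [
    FunnelStep("pricing_page", weekly_visitors=50_000, drop_off_rate=0.05),
    FunnelStep("sign_up", weekly_visitors=20_000, drop_off_rate=0.30),
    FunnelStep("identity_verification", weekly_visitors=8_000, drop_off_rate=0.55),
]
for step in rank_test_candidates(funnel):
    print(f"{step.name}: ~{step.users_lost_per_week:,.0f} users lost per week")
```

Note that the highest-traffic page is not automatically the best candidate: in this made-up funnel, the pricing page has the most visitors but loses the fewest users.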

Driving Higher AB Testing Conversion

Measuring AB testing conversion accurately is one of the most critical technical challenges product managers face during experimentation. It is not enough to simply launch two variants and pick the one with a slightly higher conversion rate after a few days. You must ensure your tracking data is clean and your results are statistically significant. This requires a solid understanding of the minimum detectable effect (MDE), the baseline conversion rate, and the sample size these imply. If you end a test early because one variant looks like it is winning, you inflate your risk of false positives. To avoid this, product teams must calculate the required sample size and test duration before the test begins and strictly adhere to that timeline.
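The pre-test calculation can be sketched with the standard normal-approximation formula for comparing two conversion rates, using only Python's standard library. The function names `sample_size_per_variant` and `required_duration_days`, the defaults of 5% significance and 80% power, and the example traffic figures are assumptions for illustration; dedicated calculators or your experimentation platform may use slightly different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in EACH variant for a two-sided test of two proportions.

    baseline: current conversion rate, e.g. 0.05 for 5%.
    relative_mde: smallest relative lift worth detecting, e.g. 0.10 for +10%.
    Assumed defaults: alpha = 0.05 (two-sided), power = 0.80.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.80
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def required_duration_days(n_per_variant: int, num_variants: int,
                           daily_eligible_users: int) -> int:
    """Days needed to expose every variant to its required sample."""
    return math.ceil(n_per_variant * num_variants / daily_eligible_users)


# Example: 5% baseline, detect a +10% relative lift, 4,000 eligible users/day.
n = sample_size_per_variant(baseline=0.05, relative_mde=0.10)
days = required_duration_days(n, num_variants=2, daily_eligible_users=4_000)
print(f"{n:,} users per variant, about {days} days")
```

Halving the MDE roughly quadruples the required sample, which is why fixing the MDE before launch matters more than any other input.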

Understanding user segments is another major factor in improving your experiment outcomes. A variant might perform poorly across your overall user base but show strong results with a specific cohort, such as returning mobile users. Digging into segment-level results and secondary metrics can uncover hidden value and inform future feature development; because each additional segment you examine is another chance of a false positive, treat such findings as hypotheses to confirm in a follow-up test. According to a comprehensive A/B testing insights report, successful experimentation programs rely heavily on granular post-test analysis. You must also look beyond the primary objective and monitor guardrail metrics to ensure your new feature isn't cannibalizing engagement in other parts of the application.
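Segment-level analysis can be sketched as a pooled two-proportion z-test run per cohort. Because checking many segments multiplies the chances of a false positive, this sketch applies a Bonferroni correction (dividing the significance level by the number of segments), which is one conservative option among several. The helper functions, segment names, and counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test (A = control, B = variant)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all (0% or 100% conversion everywhere)
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def segment_report(segments: dict[str, tuple[int, int, int, int]],
                   alpha: float = 0.05) -> dict[str, dict]:
    """Per-segment relative lift and p-value, judged against a Bonferroni-corrected threshold."""
    corrected_alpha = alpha / len(segments)
    report = {}
    for name, (conv_a, n_a, conv_b, n_b) in segments.items():
        p_value = two_proportion_p_value(conv_a, n_a, conv_b, n_b)
        lift = (conv_b / n_b) / (conv_a / n_a) - 1
        report[name] = {"lift": lift, "p_value": p_value,
                        "significant": p_value < corrected_alpha}
    return report


# Hypothetical results: (control conversions, control users, variant conversions, variant users)
results = {
    "returning_mobile": (400, 5_000, 520, 5_000),
    "new_mobile": (300, 4_000, 330, 4_000),
    "new_desktop": (600, 8_000, 590, 8_000),
}
for segment, row in segment_report(results).items():
    print(f"{segment}: lift {row['lift']:+.1%}, p = {row['p_value']:.4f}, "
          f"significant = {row['significant']}")
```

Even a segment that clears the corrected threshold was found by slicing after the fact, so it is best confirmed with a dedicated test targeting that cohort.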

We have seen the importance of rigorous tracking firsthand across numerous client engagements. When we provided interim product managers to support the central listings team at ImmoScout24, setting up reliable conversion tracking was paramount. We had to ensure that every variation was mapped correctly to downstream business events. Here are a few critical steps we implement to maintain data integrity during tests:

- Verify tracking events in staging environments before any traffic allocation begins.

- Run A/A tests periodically to check for systemic biases in your experimentation platform.

- Establish clear guardrail metrics, such as page load speed or error rates, that trigger an automatic shutdown if they degrade.

- Ensure all stakeholders agree on the primary success metric before the test goes live.
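The guardrail step in the list above can be turned into an automated check. The sketch below assumes guardrail metrics where lower is better (load time, error rate) and a per-metric tolerance relative to control; the `Guardrail` class, the `breached_guardrails` helper, metric names, thresholds, and values are all hypothetical, and in practice you would also want enough data to trust the comparison before acting on it.

```python
from dataclasses import dataclass


@dataclass
class Guardrail:
    metric: str
    # Maximum tolerated relative increase versus control, e.g. 0.10 = 10% worse.
    # Assumes lower values are better for this metric.
    max_relative_increase: float


def breached_guardrails(control: dict[str, float], variant: dict[str, float],
                        guardrails: list[Guardrail]) -> list[str]:
    """Return the names of guardrail metrics the variant has degraded past tolerance."""
    breached = []
    for g in guardrails:
        limit = control[g.metric] * (1 + g.max_relative_increase)
        if variant[g.metric] > limit:
            breached.append(g.metric)
    return breached


# Hypothetical metrics and thresholds.
guardrails = [Guardrail("p95_load_ms", 0.10), Guardrail("error_rate", 0.25)]
control = {"p95_load_ms": 1_200.0, "error_rate": 0.004}
variant = {"p95_load_ms": 1_250.0, "error_rate": 0.007}

breached = breached_guardrails(control, variant, guardrails)
if breached:
    # In a real setup, this is where the variant's traffic allocation would drop to 0%.
    print(f"Shutting down variant, guardrails breached: {breached}")
```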

Evaluating AB Testing Alternatives

While classic experimentation is the gold standard for causal inference, there are times when you must explore AB testing alternatives to gather the insights you need. Not every product or feature has the necessary traffic volume to reach statistical significance in a reasonable timeframe. B2B software, for example, often suffers from low sample sizes compared to massive B2C platforms. In these scenarios, waiting months for a test to conclude will severely throttle your product velocity. Furthermore, classic tests are designed to compare distinct variations, but they are not well-suited for open-ended exploration or answering the fundamental question of why users are behaving a certain way.

When you face these limitations, you have to pivot to other research and validation methodologies. Here are several viable alternatives that product teams can deploy depending on their specific constraints:

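- Multivariate Testing (MVT): Best used when you have massive amounts of traffic and want to test multiple variables simultaneously (like a headline, image, and button color) to find the best-performing combination. Traffic is split across every combination, so 3 headlines × 2 images × 2 button colors already yields 12 variants that each need enough users.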

- Multi-Armed Bandits: Instead of waiting for a test to end, bandit algorithms dynamically allocate more traffic to the better-performing variation as results come in. Many experimentation platforms offer this approach to minimize the opportunity cost of showing a subpar experience, though the uneven traffic split makes it harder to estimate the true size of the effect than in a fixed-allocation test.

- Fake Door Tests: If you want to validate demand for a feature before building it, you can place a button or banner in the product. When users click, they see a message that the feature is coming soon, allowing you to gauge intent without writing complex backend code.

- Usability Testing: For low-traffic environments or highly complex workflow changes, qualitative methods such as moderated usability sessions and user interviews provide deep insights into user friction that quantitative data cannot match.
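To make the bandit idea concrete, here is a minimal sketch of Thompson sampling, one widely used bandit algorithm; the article does not prescribe a specific one. Each variant's conversion rate is modeled with a Beta distribution that is updated after every visitor, and each new visitor goes to whichever variant draws the highest sampled rate. The class name, the simulated true conversion rates, and the visitor count are hypothetical.

```python
import random
from typing import Optional


class ThompsonSamplingBandit:
    """Beta-Bernoulli Thompson sampling over a fixed set of variants."""

    def __init__(self, arms: list[str], seed: Optional[int] = None) -> None:
        self.rng = random.Random(seed)
        # Every arm starts at a Beta(1, 1) prior, i.e. uniform over [0, 1].
        self.successes = {arm: 0 for arm in arms}
        self.failures = {arm: 0 for arm in arms}

    def choose_arm(self) -> str:
        # Draw one plausible conversion rate per arm and pick the highest draw.
        draws = {arm: self.rng.betavariate(self.successes[arm] + 1,
                                           self.failures[arm] + 1)
                 for arm in self.successes}
        return max(draws, key=draws.get)

    def record(self, arm: str, converted: bool) -> None:
        if converted:
            self.successes[arm] += 1
        else:
            self.failures[arm] += 1

    def pulls(self) -> dict[str, int]:
        return {arm: self.successes[arm] + self.failures[arm] for arm in self.successes}


# Simulation with made-up true conversion rates that the bandit cannot see.
true_rates = {"A": 0.03, "B": 0.06}
bandit = ThompsonSamplingBandit(list(true_rates), seed=7)
world = random.Random(42)
for _ in range(20_000):
    arm = bandit.choose_arm()
    bandit.record(arm, world.random() < true_rates[arm])
print(bandit.pulls())  # with these rates, expect most visitors to be routed to "B"
```

Because allocation keeps shifting toward the apparent winner, the weaker variant ends up with a small sample, which is exactly the trade-off between lower opportunity cost and less precise effect estimates.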

By understanding the strengths and weaknesses of different validation methods, product managers can build a more versatile toolkit. The ultimate goal is never to force an experiment where it does not fit, but to choose the research method that provides the highest confidence with the least amount of effort.

Conclusion

Building a strong culture of experimentation is one of the highest-leverage activities a product team can undertake. It shifts organizational conversations away from subjective opinions and grounds them in observable user truth.

As you implement these practices, remember that a failed test is still a successful learning opportunity. The faster you can invalidate incorrect assumptions, the faster you can pivot toward features that truly drive value and growth for your product.

Read More Posts

Customer Journey: Optimize Digital Experiences Effectively

Unlocking the Synergy of UI and UX Design for Product Success

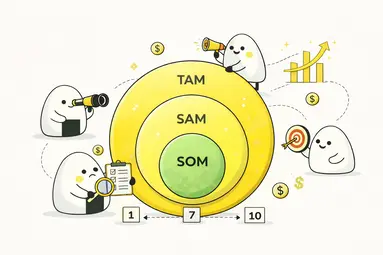

Master the Market: A Guide to TAM SAM SOM for Product Leaders

.webp)

The Comprehensive Guide to How NPS is Calculated for Product Growth

.webp)

Understanding DAU Meaning: A Guide to Tracking Daily Active Users

.webp)